Facial Feature Enhancement for Immersive Real-Time Avatar-Based Sign Language Communication using Personalized CNNs

Kristoffer Waldow, Arnulph Fuhrmann and Daniel Roth

In: Proceedings of the 31st IEEE Virtual Reality Conference (VR ’24), Orlando, USA

Abstract:

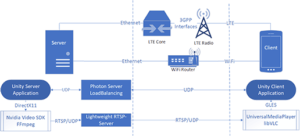

Facial recognition is crucial in sign language communication. Especially in virtual reality and avatar-based communication, enhanced facial features have the potential to integrate the deaf and hard-of-hearing community and to improve speech comprehension and empathy. However, current methods lack precision in capturing nuanced expressions. To address this, we present a real-time solution that utilizes personalized Convolutional Neural Networks (CNNs) to capture intricate facial details, such as tongue movement and individual puffed cheeks. Our system’s classification models offer easy expansion and integration into existing facial recognition systems via UDP network broadcasting.
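As an illustration of the UDP broadcasting idea mentioned in the abstract, the following is a minimal sketch of how per-frame classification scores could be packed into a datagram and broadcast to listening clients. It is not the authors' implementation: the label set, the JSON message layout, the port number, and the function names `encode_packet`, `decode_packet`, and `broadcast` are all assumptions made for this example.

```python
import json
import socket

# Hypothetical label set. The abstract mentions tongue movement and
# individually puffed cheeks but does not list the actual classes.
LABELS = ["neutral", "tongue_out", "puff_left", "puff_right"]


def encode_packet(frame_id, probabilities):
    """Pack one frame's class scores into a small JSON datagram (assumed format)."""
    if len(probabilities) != len(LABELS):
        raise ValueError("expected one probability per label")
    scores = {label: round(float(p), 4) for label, p in zip(LABELS, probabilities)}
    return json.dumps({"frame": frame_id, "scores": scores}).encode("utf-8")


def decode_packet(data):
    """Return (frame_id, best_label, scores) from a received datagram."""
    message = json.loads(data.decode("utf-8"))
    scores = message["scores"]
    best_label = max(scores, key=scores.get)
    return message["frame"], best_label, scores


def broadcast(packet, port=9000, address="255.255.255.255"):
    """Send one datagram to all listeners on the local network segment.

    The port and broadcast address are placeholder values.
    """
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.setsockopt(socket.SOL_SOCKET, socket.SO_BROADCAST, 1)
        sock.sendto(packet, (address, port))
```

A sender would call `broadcast(encode_packet(frame_id, scores))` once per classified frame, and any client bound to the same port could pass the received bytes to `decode_packet`. Because UDP is connectionless, new receivers can be added without changing the sender, at the cost of no delivery guarantee.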