Intersection-free mesh decimation for high resolution cloth models

Ursula Derichs, Martin Mišiak and Arnulph Fuhrmann

Virtuelle und Erweiterte Realität – 17. Workshop der GI-Fachgruppe VR/AR, 2020, Trier, Germany

Sven Hinze, Martin Mišiak and Arnulph Fuhrmann

Virtuelle und Erweiterte Realität – 17. Workshop der GI-Fachgruppe VR/AR, 2020, Trier, Germany

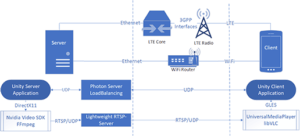

In this paper, a remote rendering system for a Unity-based AR app is presented. The system was implemented for an edge server located within the mobile network operator's network.

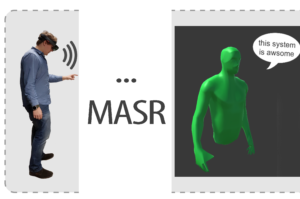

We propose an easy-to-integrate automatic speech recognition (ASR) and textual visualization extension for an avatar-based MR remote collaboration system that visualizes speech via spatially floating speech bubbles. In a small pilot study, our extension achieved a word accuracy of 97%, measured using the widely used word error rate (WER).
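The abstract reports word accuracy derived from the word error rate. Under the common convention (assumed here; the abstract does not spell out its exact formula), WER = (S + D + I) / N, where S, D and I are the word substitutions, deletions and insertions needed to turn the reference transcript into the recognized one, N is the number of reference words, and word accuracy = 1 − WER. A minimal sketch of that computation via word-level Levenshtein distance; the function names are illustrative, not part of the described system:

```python
def word_error_rate(reference: str, hypothesis: str) -> float:
    """WER = (substitutions + deletions + insertions) / number of reference words."""
    ref = reference.split()
    hyp = hypothesis.split()
    if not ref:
        raise ValueError("reference must contain at least one word")
    # prev[j] holds the edit distance between ref[:i-1] and hyp[:j]
    prev = list(range(len(hyp) + 1))
    for i in range(1, len(ref) + 1):
        curr = [i] + [0] * len(hyp)
        for j in range(1, len(hyp) + 1):
            cost = 0 if ref[i - 1] == hyp[j - 1] else 1
            curr[j] = min(
                prev[j] + 1,         # deletion of a reference word
                curr[j - 1] + 1,     # insertion of an extra hypothesis word
                prev[j - 1] + cost,  # substitution (or match when cost == 0)
            )
        prev = curr
    return prev[len(hyp)] / len(ref)


def word_accuracy(reference: str, hypothesis: str) -> float:
    """Word accuracy under the assumed convention 1 - WER (can become negative)."""
    return 1.0 - word_error_rate(reference, hypothesis)


if __name__ == "__main__":
    ref = "the quick brown fox jumps"
    hyp = "the quick brown box jumps"  # one substitution out of five words
    print(word_error_rate(ref, hyp), word_accuracy(ref, hyp))
```

Because insertions are counted, WER can exceed 1, so word accuracy defined this way is not bounded below by 0.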

This paper investigates the effects of normal mapping on the perception of geometric depth between stereoscopic and non-stereoscopic views.

Kristoffer Waldow, Arnulph Fuhrmann and Stefan M. Grünvogel. In: Proceedings…

We investigate the influence of four different audio representations on visually induced self-motion (vection). Our study tested the hypothesis that the feeling of visually induced vection can be increased by audio sources while negative effects such as visually induced motion sickness are reduced.

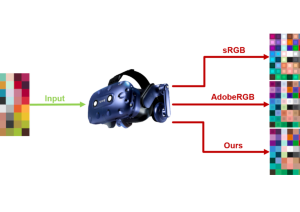

When rendering images in real time, shading pixels is a comparatively expensive operation, especially for head-mounted displays, where separate images are rendered for each eye and high frame rates must be achieved. Upscaling algorithms are one way of reducing pixel shading costs. Four basic upscaling algorithms are implemented in a VR rendering system, followed by a user study on subjective image quality. We find that users preferred methods with better contrast preservation.
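The abstract does not name the four upscaling algorithms. As an assumption, the sketch below shows two textbook candidates, nearest-neighbor and bilinear upscaling, on a grayscale image stored as nested lists with an integer scale factor. It only illustrates the idea of shading fewer pixels and reconstructing the rest; it is not the evaluated implementation, which would run on the GPU.

```python
import math


def upscale_nearest(img, scale):
    """Nearest-neighbor: every output pixel copies one source pixel (keeps hard edges)."""
    h, w = len(img), len(img[0])
    return [[img[y // scale][x // scale] for x in range(w * scale)]
            for y in range(h * scale)]


def upscale_bilinear(img, scale):
    """Bilinear: blend the four nearest source pixels (smooths edges, lowers local contrast)."""
    h, w = len(img), len(img[0])
    out = []
    for y in range(h * scale):
        # Map the output pixel center back into source coordinates and clamp to the image.
        sy = min(max((y + 0.5) / scale - 0.5, 0.0), h - 1)
        y0 = int(math.floor(sy))
        y1 = min(y0 + 1, h - 1)
        fy = sy - y0
        row = []
        for x in range(w * scale):
            sx = min(max((x + 0.5) / scale - 0.5, 0.0), w - 1)
            x0 = int(math.floor(sx))
            x1 = min(x0 + 1, w - 1)
            fx = sx - x0
            top = img[y0][x0] * (1 - fx) + img[y0][x1] * fx
            bottom = img[y1][x0] * (1 - fx) + img[y1][x1] * fx
            row.append(top * (1 - fy) + bottom * fy)
        out.append(row)
    return out


if __name__ == "__main__":
    low_res = [[0, 10]]  # a hard edge between a dark and a bright pixel
    print(upscale_nearest(low_res, 2))
    print(upscale_bilinear(low_res, 2))
```

On the hard edge above, nearest-neighbor keeps the full 0-to-10 step between adjacent output pixels, while bilinear spreads it over intermediate values, which is one simple way in which upscaling methods differ in how much contrast they preserve.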

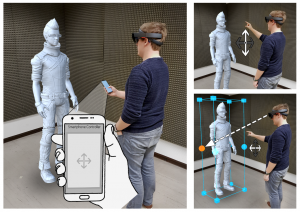

In this paper, we present a Mixed Reality telepresence system that connects multiple AR or VR devices in a shared virtual environment using the simple MQTT networking protocol. MQTT follows a publish-subscribe pattern, which enables reliable, easy, platform-independent integration. This makes it possible to implement different clients that handle communication and allow remote collaboration. To enable embodied, natural human interaction, the system maps the human interaction channels (gestures, gaze and speech) to an abstract stylized avatar using an upper-body inverse kinematics approach. This setup allows spatially separated persons to interact with each other through avatar-mediated communication.
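To illustrate the publish-subscribe routing that MQTT provides, the following is a minimal in-memory sketch. It is not an MQTT client: a real deployment would use a client library talking to an MQTT broker. The topic hierarchy (`room/1/avatar/<user>/pose`) and the `InMemoryBroker` class are hypothetical, since the abstract does not describe the system's topic layout. The `topic_matches` function follows MQTT's topic-filter wildcards, where `+` matches exactly one level and `#` matches the parent level and everything below it; QoS, retained messages and the special handling of `$`-prefixed topics are omitted.

```python
from typing import Callable, List, Tuple


def topic_matches(topic_filter: str, topic: str) -> bool:
    """Match a topic against an MQTT-style filter with '+' and '#' wildcards."""
    f_levels = topic_filter.split("/")
    t_levels = topic.split("/")
    for i, level in enumerate(f_levels):
        if level == "#":
            return True  # multi-level wildcard: matches this level and all below it
        if i >= len(t_levels):
            return False  # filter is longer than the topic
        if level != "+" and level != t_levels[i]:
            return False  # literal level mismatch ('+' matches any single level)
    return len(f_levels) == len(t_levels)


class InMemoryBroker:
    """Hypothetical stand-in for an MQTT broker: routes publishes to matching subscribers."""

    def __init__(self) -> None:
        self._subs: List[Tuple[str, Callable[[str, bytes], None]]] = []

    def subscribe(self, topic_filter: str, callback: Callable[[str, bytes], None]) -> None:
        self._subs.append((topic_filter, callback))

    def publish(self, topic: str, payload: bytes) -> int:
        """Deliver the payload to every matching subscriber; return the delivery count."""
        delivered = 0
        for topic_filter, callback in self._subs:
            if topic_matches(topic_filter, topic):
                callback(topic, payload)
                delivered += 1
        return delivered


if __name__ == "__main__":
    broker = InMemoryBroker()
    # A VR client listens to the avatar poses of every participant in room 1.
    broker.subscribe("room/1/avatar/+/pose", lambda t, p: print("VR client got", t, p))
    # An AR client publishes its user's head pose (payload format is illustrative).
    broker.publish("room/1/avatar/alice/pose", b"0.0,1.6,0.0")
```

Because publishers and subscribers only share topic names, an AR headset and a VR client never need to know about each other directly, which is what makes adding new client platforms straightforward.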

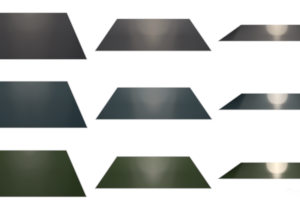

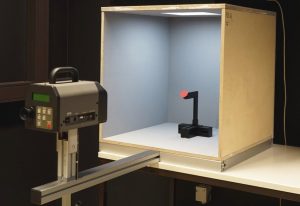

The simulation of light-matter interaction is a major challenge in computer graphics. Particularly challenging is modelling the light-matter interaction of rough surfaces, which contain several different scales of roughness at which many different scattering phenomena take place. Some appearance-critical phenomena are still poorly approximated or omitted entirely by current BRDF models. One of these is the reddening effect, a tilting of the reflectance spectra towards long wavelengths, especially in the specular reflection. The observation that the reddening effect occurs on rough surfaces is new, and its characteristics and source have not been thoroughly researched or explained. Furthermore, it was not even clear whether the reddening really exists or whether the observed effect resulted from measurement errors. In this work, we give a short introduction to the reddening effect and show that it is indeed a property of the material reflectance function and does not originate from measurement errors or optical aberrations.

To make anticipation and reaction training more attractive for youth goalkeepers, the goal was to develop a sport-specific environment in virtual reality (VR).

In Augmented Reality, interaction with the environment can be achieved through a number of different approaches. In current systems, the most common are hand and gesture inputs. However, experimental applications have also integrated smartphones as intuitive interaction devices and demonstrated great potential for different tasks. One particular task is constrained object manipulation, for which we conducted a user study. In it, we compared standard gesture-based approaches with touch-based interaction via smartphone. We found that a touch-based interface is significantly more efficient, although gestures are subjectively more accepted. From these results, we draw conclusions on how smartphones can be used to realize modern interfaces in AR.

The ability to precisely localize a device or user within a known space would enable many use cases in location-based augmented reality. We propose a localization service based on sparse visual information using ARCore, a state-of-the-art augmented reality platform for mobile devices.

In this work, we acquired a set of precise, spectrally resolved ground truth data. It consists of a precise description of a newly developed reference scene, including the isotropic BRDFs of 24 color patches, as well as reference measurements of all patches under 13 different angles inside the reference scene.