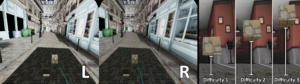

Metaverse in Civil Engineering

N. Bartels, A. Fuhrmann, R. Kasper, P. Di Biase, K. Hahne: Metaverse in Civil Engineering

In: 4th IEEE German Education Conference (GECon), 2025

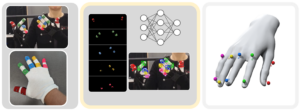

Kristoffer Waldow, Constantin Kleinbeck, Arnulph Fuhrmann and Daniel Roth

In: IEEE Transactions on Visualization and Computer Graphics (2025)

🏆 Honorable Mention, Best Paper Award

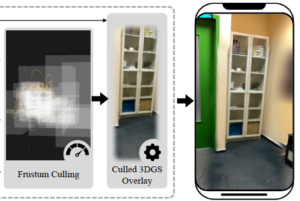

Kristoffer Waldow, Jonas Scholz, Arnulph Fuhrmann

In: Proceedings of 32nd IEEE Virtual Reality Conference (VR ’25), Saint-Malo, France

Sina Rüter, Kristoffer Waldow, Niels Bartels and Arnulph Fuhrmann

Conference: 24th International Conference on Construction Applications of Virtual Reality (CONVR 2024), Western Sydney University, NSW, Australia

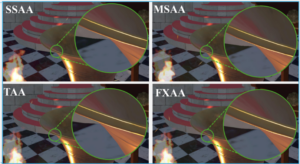

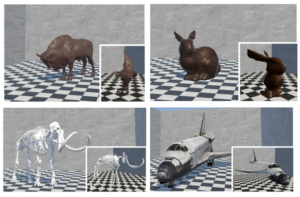

Olaf Clausen, Arnulph Fuhrmann, Martin Mišiak, Marc Erich Latoschik and Ricardo Marroquim

VRST ’24: Proceedings of the 30th ACM Symposium on Virtual Reality Software and Technology

Kristoffer Waldow, Jonas Scholz, Martin Mišiak, Arnulph Fuhrmann, Daniel Roth and Marc Erich Latoschik

Virtuelle und Erweiterte Realität – 21. Workshop der GI-Fachgruppe VR/AR, 2024, Germany

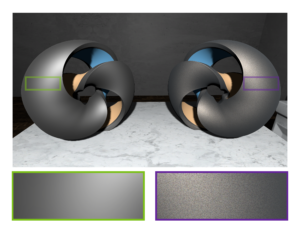

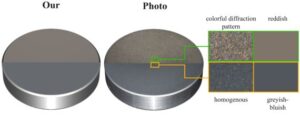

Olaf Clausen, Y. Chen, Arnulph Fuhrmann and Ricardo Marroquim

MANER Conference – Material Appearance Network for Education and Research (2024), Workshop on Material Appearance Modeling

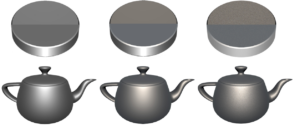

Olaf Clausen, Martin Mišiak, Arnulph Fuhrmann, Ricardo Marroquim and Marc Erich Latoschik

Journal of Computer Graphics Techniques (JCGT), vol. 13, no. 1, pp. 1–27, 2024

Kristoffer Waldow, Arnulph Fuhrmann and Daniel Roth

Proceedings of 31st IEEE Virtual Reality Conference (VR ’24), Orlando, USA

Kristoffer Waldow, Lukas Decker, Martin Mišiak, Arnulph Fuhrmann, Daniel Roth and Marc Erich Latoschik

In: Proceedings of 31st IEEE Virtual Reality Conference (VR ’24), Orlando, USA

🏆 Best Poster Award

Kristoffer Waldow, Arnulph Fuhrmann, Daniel Roth

6th IEEE International Conference on Artificial Intelligence & extended and Virtual Reality 2024 (AIxVR ’24)

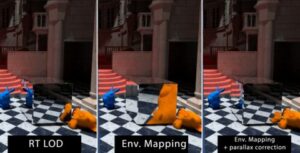

Martin Mišiak, Tom Müller, Arnulph Fuhrmann and Marc Erich Latoschik

Virtuelle und Erweiterte Realität – 20. Workshop der GI-Fachgruppe VR/AR, 2023, Köln, Germany

Martin Mišiak, Arnulph Fuhrmann, Marc Erich Latoschik

ACM Symposium on Applied Perception 2023 (SAP ’23)

Olaf Clausen, Yang Chen, Arnulph Fuhrmann and Ricardo Marroquim

Computer Graphics Forum, vol. 42, pp. 245–260

Martin Mišiak, Arnulph Fuhrmann, Marc Erich Latoschik

Proceedings of the 27th ACM Symposium on Virtual Reality Software and Technology (VRST ’21), 2021

Nigel Frangenberg, Kristoffer Waldow and Arnulph Fuhrmann

Proceedings of 28th IEEE Virtual Reality Conference (VR ’21), Lisbon, Portugal